Hi, I’m Arne Van Gestel. From 2nd March 2020 to 29th May 2020 I was an intern at JIDOKA, and my internship assignment was to research augmented reality. But what is augmented reality? Wikipedia says the following: “Augmented reality or AR is a live, direct or indirect, image of reality to which elements are added by a computer”. In other words, a computer (or, in the case of my internship, a smartphone) adds virtual elements to the real world. Augmented reality is a relatively new technology that is being used more and more, but mainly for games such as Pokémon GO rather than for professional applications.

So my assignment was to investigate this new technology and see whether it could be used in a professional environment. To explore and demonstrate the possibilities of augmented reality, I developed a demo application during my internship. The app shows what AR can do and sketches some examples of how it could be used to achieve different goals.

Usually, augmented reality is implemented on a smartphone, because modern smartphones are powerful enough and widely available. Dedicated AR hardware also exists, such as Microsoft’s HoloLens, but it is expensive and far less common than a smartphone.

There are several ways to implement augmented reality on smartphones. My internship focused on ARCore, developed by Google. ARCore is mainly developed for Android, so that is the operating system I worked on, and the application is written in Kotlin. ARCore is usually combined with Sceneform, a 3D framework also developed by Google. Sceneform is responsible for rendering the objects that are placed in the real world.

Because all of these technologies were new to me, I spent the first two weeks taking Kotlin courses and discovering Android development and the possibilities of ARCore and Sceneform. At the beginning of the second week I drew up a plan of approach in which I planned the rest of my internship and set several deadlines. In this plan I also described the technologies I would use and the requirements the app had to meet.

After preparing the plan of approach, I found an open-source sample app online that demonstrated some of the possibilities of ARCore, and I used it as the starting point for my demo app. Unfortunately, because of the Corona crisis, these first two weeks were also the only ones I was able to spend at the Hasselt office.

After my first two weeks the lockdown began, so from then on I had to work from home. Luckily for me, this went quite smoothly. The daily meetings took place online, so communication with my colleagues remained good. Outside of work, not much changed for me personally during the lockdown.

The following weeks were spent implementing new features, fixing bugs and creating 3D models for the app. ARCore and Sceneform are well documented, but the available debugging tools are limited, which sometimes made it difficult to investigate problems properly. Once several features were in place, it was time to bring everything together into a coherent whole.

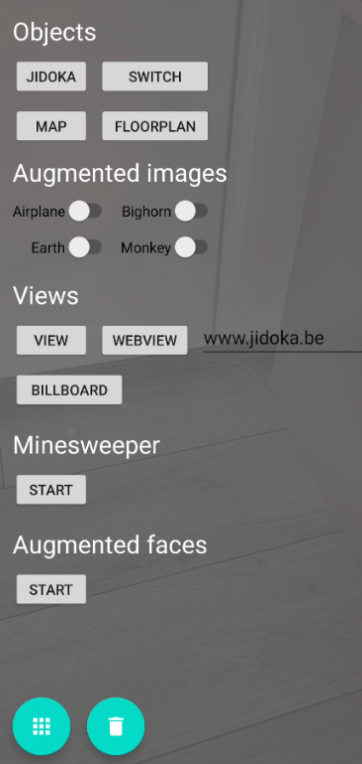

At first, the different parts of the application were divided into categories that were implemented separately from each other, but this is not ideal for a demo application. That’s why, in the end, I combined all parts into a single app in which the different features are available from a menu.

Of course, the development of the application did not go entirely smoothly. Apart from a relatively large number of small bugs that were easy to solve, there were also several larger problems, not all of which I managed to solve. The first is the keyboard: when the smartphone’s keyboard is opened from within the AR environment, nothing happens. This is a long-known issue with ARCore for which, at the time of writing, there is no solution.

Another feature I had a lot of problems with is augmented images. Augmented Images is the name Google uses for ARCore’s ability to recognize and track images. This means that I can register a specific image, for example a picture of the earth, so that the app recognizes it, and then perform different actions when it is detected. For example, the app can recognize an image of the earth and replace it with an image of Mars. During a meeting, the question arose whether these images could be made dynamic, meaning that users could add their own images for the app to recognize. In theory this is possible, but in practice it turned out not to work.

The images that need to be recognized are stored in an ‘augmented image database’. This database can be set up in advance with a separate tool or created in the application itself. It is also possible to create an empty database and add images to it at runtime, but this did not work correctly or reliably in any scenario I tried. What certainly doesn’t help is that this augmented image database has virtually no debugging tools, which makes it extremely difficult to troubleshoot problems with it. In the end, I concluded that dynamic images were not feasible with the time and tools I had available.

Despite the Corona crisis and the problems I encountered during my assignment, I enjoyed my internship a lot. It was very interesting and educational.